OpenAI has recently produced a course called ‘ChatGPT Foundations for Educators’, which is designed to be a silver bullet for teaching educators how to use AI. The course is the product of a partnership with Common Sense Media, which is (usually) a reliable source of ‘common sense’ reviews. What they seem to miss is how teachers can use AI effectively and purposefully. They do not really encourage teachers to be co-designers, critical evaluators and engineers of content.

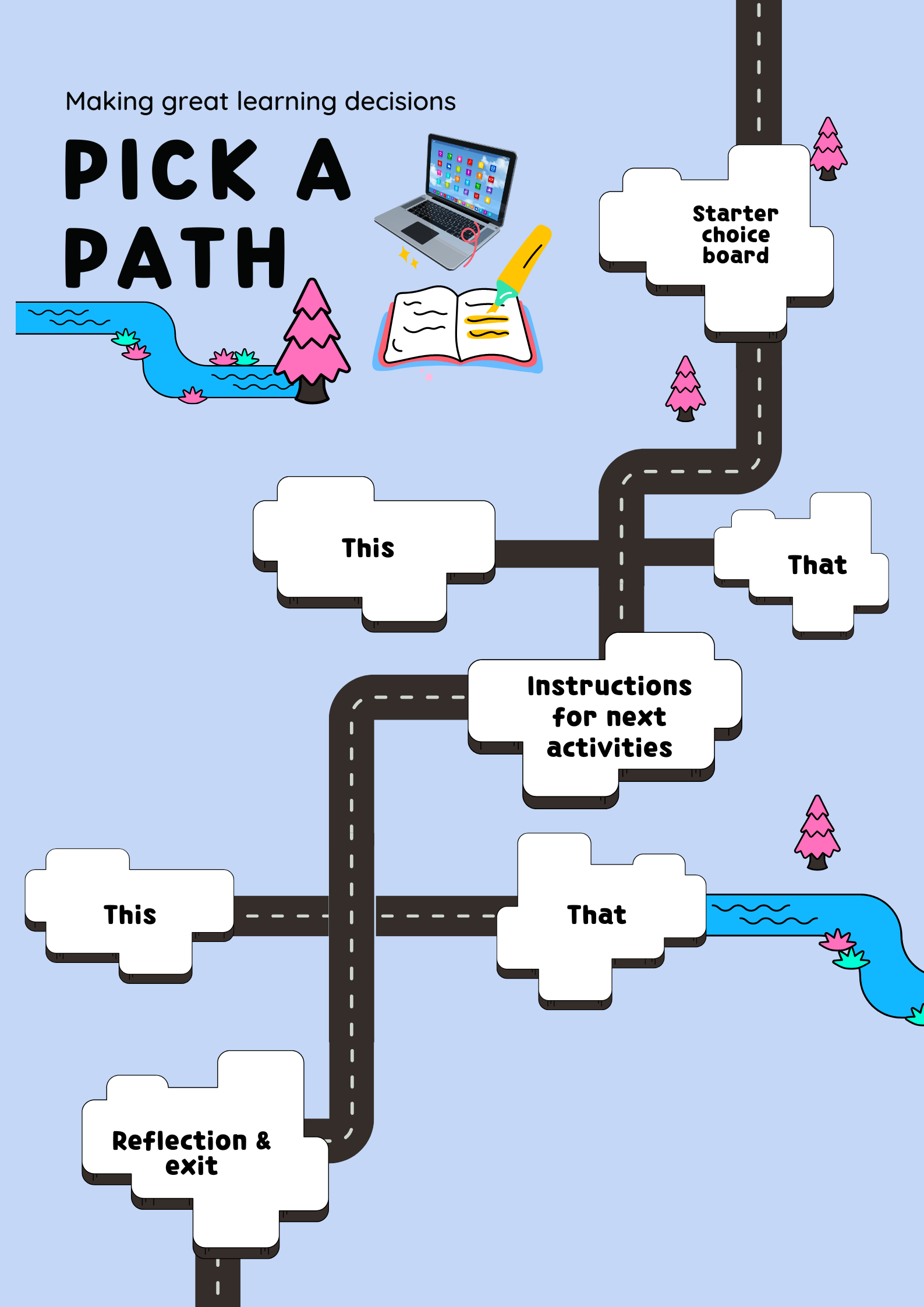

It falls short on practical (and non-contradictory) use-case scenarios and omits much of the big-picture questioning about the ‘why’ behind using AI. The current debate seems to assume that teachers must choose between AI and the highway. But maybe this yes/no binary thinking is the real problem.

AI shouldn’t be an ‘all in or all out’, yes/no debate. It is much more complicated than that.

If there were a fork in the road marked ‘AI or the highway’, choosing the highway (no AI) would (still) be just fine. If you haven’t read about the OpenAI foundations course, you can find a useful critique of it here: ‘How Does OpenAI Imagine K-12 Education?’ by Eryk Salvaggio.

Here are some of the key points of critique:

- it assumes that teachers are passive producers of content
- it posits that productivity is more important than effective pedagogy
- it does not teach critical AI literacy
- it does not target pedagogy
- it is not a best-practice example of Universal Design for Learning (UDL) in action (no closed captioning)
- it assumes that administrative tasks can all be automated
- it does not seem to value the agency of teacher-owned creative processes in course design
- it overstates the predictive capabilities of AI

And my addition:

- it assumes that teachers need to be ‘all or nothing’ consumers

OpenAI seems to champion itself as a heroic solution to all of the problems teachers have. It does not really dig into the additional problems that using AI blindly can create.

If you stand with AI, then you obviously must use it for everything: save so much time, create so many more resources, and power up your productivity to the point that even writing your own meeting agenda can be outsourced. But quantity does not beat quality, and producing is not the same as creating.

When I was at Elam School of Fine Arts and churning out bad art at a rate of knots, my professor said to me: ‘There’s enough sh*t in the world. Why contribute to it?’ I think this is golden advice for finding your own position on AI in education.

An easy ‘out’ is to stand on the opposite road with those who say no. You might stand in solidarity with teachers who see AI as a flash in the pan that is best avoided. These teachers might be summed up as the ‘pen and paper warriors’ who want to make sure that textbooks are used instead of laptops. If you avoid technology, you do avoid a lot of the ‘bad’ things, but you also risk taking learning opportunities away from students who need to develop critical AI literacy. Teachers have a duty to empower students to understand, question, and navigate AI responsibly. This isn’t just about teachers using the tools to enhance their own productivity; it is about helping students critique and control their own uses of AI so that they become critical creators of the future.

If you want to start using AI in the classroom, it is OK to do so cautiously. In fact, it is best to approach any tool with your pedagogue hat on and ask questions like ‘Where is the science?’, ‘How does this enhance the learner experience?’, ‘How does this increase critical thinking?’, ‘How might this offer more agency?’, ‘How might this remove barriers to learning?’ and so on. If a tool doesn’t align with your lens of what education should be and needs to be in the future, then don’t use it. Or, more simply put: if you are adding to the sh*t in the world, don’t.

AI, when used purposefully, has the power to enhance, augment and improve learning, but only if you become an active architect of learning.

So what next? Thinking and Linking:

Prioritise Critical Literacy: Read up on AI’s limitations, biases, and ethical implications. Foster a culture of inquiry rather than blind adoption. Read widely or at least dip your toes in: 12 Best Blogs on AI

Focus on Inclusivity: Accessibility should be a baseline, not an afterthought. All training materials must meet diverse needs to ensure equitable learning. Use AI to expand access to learning, not restrict it.

Balance Efficiency with Depth: Productivity should not come at the expense of the thoughtful, creative processes integral to teaching. AI should enhance, not overshadow, pedagogical engagement.

Collaborate and Innovate: Join a community of practice to take part in the critical conversation (this AI Forum is really worthwhile: it has fortnightly recorded webinars and emailed transcripts, an easy win for those of us who are time-poor). Even if you don’t join a community of practice, you can still share your AI innovations, successes, and challenges with colleagues in-house.

Critique Your Use: Think about process over product, learner agency, and, above all, the experience for learners. How is it augmenting and enhancing the learner experience? How is it supporting more critical thinking? Ask ethical questions: How does this tool support diverse learners in my classroom? What biases might the AI outputs carry, and how can I address them? Are the benefits worth the potential trade-offs in creativity or critical thinking? My work-in-progress rubric is below.

Become an Engineer, Not a Consumer: There are SO MANY new AI tools on the market right now, with ChatGPT being just one drop in a vast ocean. Popular educational solutions like MagicSchool.AI can create educational consumables in seconds, but the outputs might not truly benefit students’ experience. Consider how you might engineer your own more purposeful solutions rather than accepting ready-made products that might encourage passivity or feed another tech company’s coffers.

Explore Innovation: For some interesting use cases for innovating with AI in the classroom, check out Harvard’s AI Pedagogy Project (this was also mentioned in the first blog link).

Put Pedagogy over Product: AI tools are only as effective as the intentionality behind their use. Targeting strategies such as flipped classrooms, differentiation, UDL or gamified learning means you can apply AI purposefully within a pedagogical framework.

I created this rubric (a work in progress) based on the Microsoft Partners in Learning ITL rubrics. The full rubric has more categories; this is the first page, shown as an example.

AI might not be a silver bullet or a magic solution, but neither is it a storm to be feared.

It’s not really an ‘AI or the highway’ scenario that forces a binary choice of ‘this or that’. AI is simply a tool, and like any tool, its value lies entirely in how we use it. By asking critical questions, exploring practical use cases, and fostering collaboration, we can move beyond the ‘yes or no’ debate. Instead, we can become thoughtful, critical users who forge our own purposeful path forward, together.

I added this as a provocation: does SAMR (Substitution, Augmentation, Modification, Redefinition) work when considering AI? Below is Puentedura’s SAMR rubric, adapted for AI use.

Thoughts? Questions? Leave a comment to share them.